Using GPU-enabled JupyterLab on the K8s home cluster

My original plan for the home system started off fairly simple: I just wanted a machine with a GPU in it so that I could teach myself ML/DL. As I got further into it, though, I realized I needed a more general-purpose development environment, one capable of distributed processing and of running real ML pipelines, so I ended up with a three-hypervisor (ESXi) system running HA Kubernetes. It lets me quickly proof-of-concept all sorts of projects and keep up with the latest technologies. Currently, for example, I’m building out some TFX/Kubeflow pipelines (blog post about that coming soon).

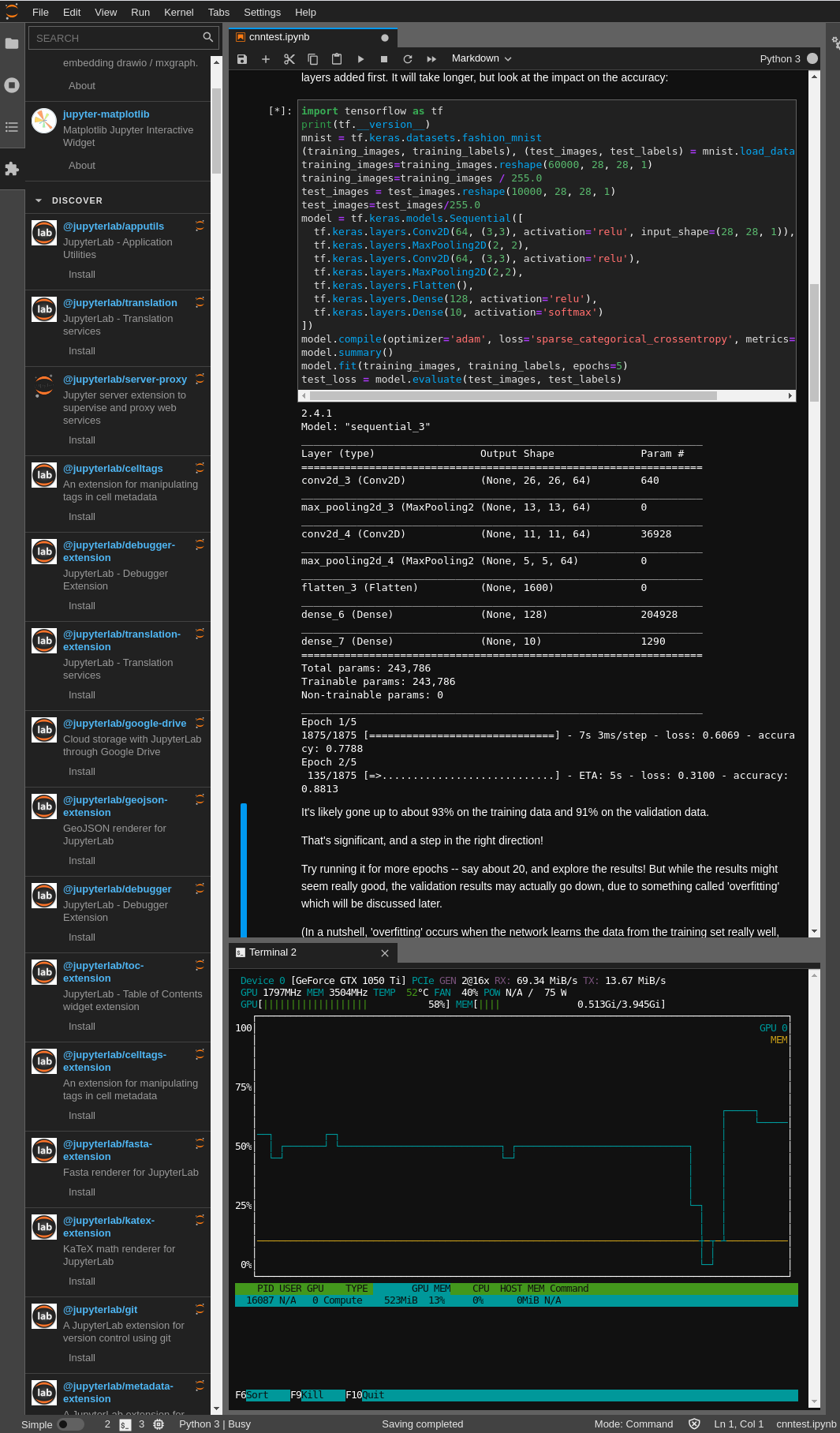

Early on I decided to use JupyterLab as my ML development environment of choice, but because I was now running on top of a hypervisor, some additional work had to be done to punch the GPUs through to the JupyterLab pods. Once you figure all that out, though, you end up with an awesome environment that looks like this:

Details to follow soon:

- creating GPU-enabled ESXi templates

- JupyterLab ‘Docker Stacks’ and how to modify them for use with GPUs

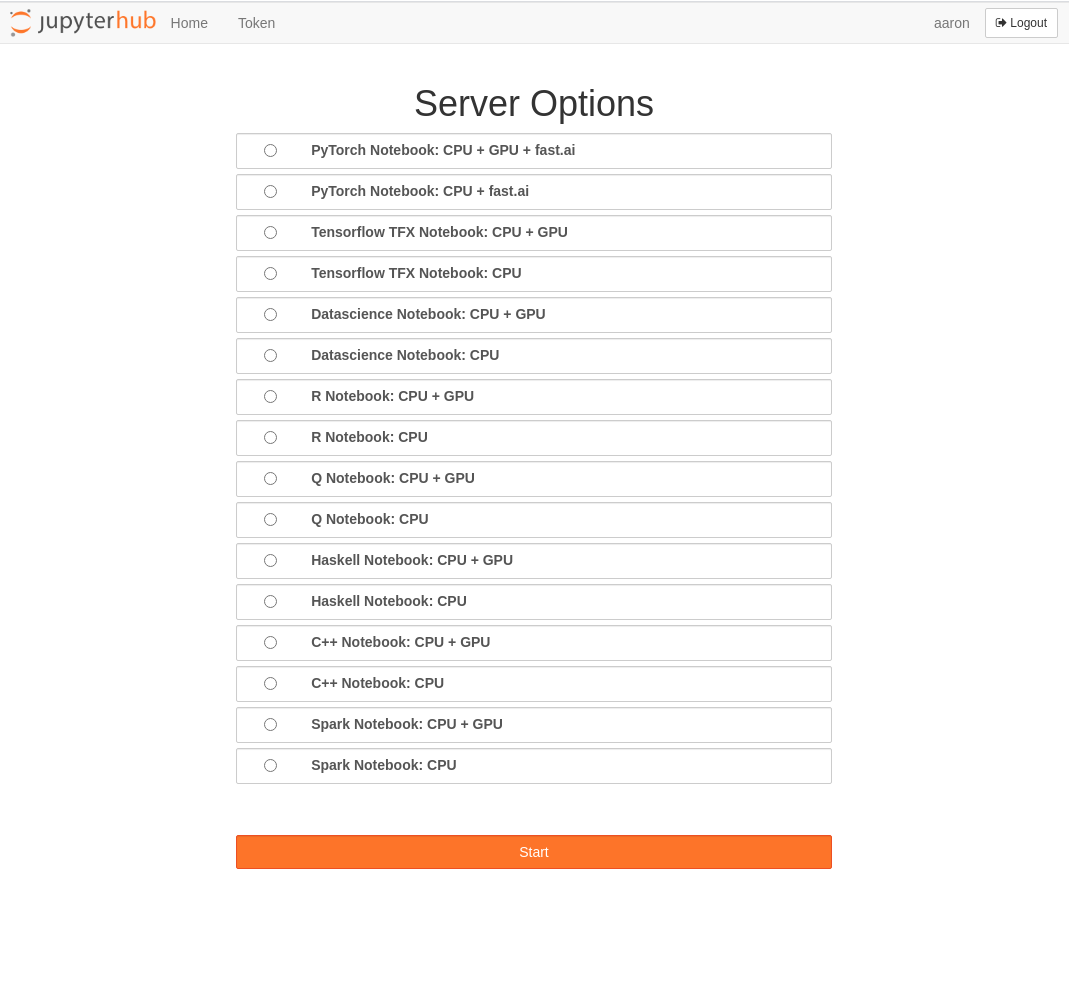

- details about Docker Stacks in general and how to modify JupyterLab on K8s to support different environments

- code repo here
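Until those details are written up, here is a minimal sketch of the last hop in the chain: how a JupyterLab pod asks Kubernetes for a GPU. It assumes the NVIDIA device plugin is already running on the GPU nodes (that is what exposes the `nvidia.com/gpu` resource), and the pod name, label, and image name are placeholders, not the ones used on this cluster. The helper `gpu_notebook_pod` is hypothetical and only builds the manifest as a Python dict.

```python
import json


def gpu_notebook_pod(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Pod manifest that requests `gpus` NVIDIA GPUs.

    Assumption: the NVIDIA device plugin is installed, so the cluster
    advertises the extended resource `nvidia.com/gpu`.
    """
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": "jupyterlab"}},
        "spec": {
            "containers": [
                {
                    "name": "jupyterlab",
                    "image": image,  # placeholder: a GPU-enabled JupyterLab image
                    "ports": [{"containerPort": 8888}],  # Jupyter's default port
                    # GPUs are requested under `limits`; the scheduler then
                    # places the pod only on a node with a free GPU.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    pod = gpu_notebook_pod("jupyterlab-gpu", "example/jupyterlab-gpu:latest")
    print(json.dumps(pod, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest can be saved to a file and applied with `kubectl apply -f`. The hypervisor-level passthrough and the image changes still have to be in place for the container to actually see the device.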

Multi-environment JupyterLab: